Before Christmas, I began reading Peter Carruthers’ “The Architecture of the Mind: Massive Modularity and the Flexibility of Thought”. It’s thorough and well argued, but for a couple of reasons, I started to lose patience with it about a third through, and it’s uncertain if I’ll finish. Nevertheless, it’s got me thinking hard about some of the issues for the first time in years. I have long been persuaded by Noam Chomsky’s argument for the existence of a specific, genetically endowed language faculty, but wondered how much it would generalise outside language. Carruthers has been a leading advocate of the perspective that applies Chomsky’s core insight broadly across diverse mental tasks. Opposing schools of thought, like empiricism or behaviourism, usually reject the idea of such highly specific, innately encoded mental structures. They hold that much of human mental performance can be explained by the effectiveness of one domain-general learning and reasoning mechanism. This latter perspective is favoured at the moment by many in the field of artificial intelligence, and is often associated with the “connectionist” approach to cognitive science (unfairly, in my view).

Carruthers makes the case that “massive modularity” of the mind is something we might expect from simple comparative and evolutionary biology considerations. Biological systems exhibiting complex functional characteristics invariably also exhibit a hierarchical, modular organisation. As the mind seems to correspond to a biological system, and plainly exhibits complex functional characteristics, it is natural to suppose that the mind should exhibit hierarchical modular organisation. Carruthers musters various evolutionary biology and system design arguments as to why modularity is a sensible strategy, and therefore why we should expect to see it in the mind, as in other complex systems. To my mind, Carruthers gets rather too deep in the weeds of evolutionary arguments, and seems strongly motivated to defend “evolutionary psychology” (writ large) against critics. I think it suffices to make a prima facie case that the mind should behave like other complex biological systems, without putting up arguments for the empirical success of various other irrelevant theories in evolutionary psychology (or as he misleadingly calls them, “predictions”).

Establishing that the “mind”, at some level of abstraction, exhibits functional modularity does not tell us much. It is an empirical fact that the brain exhibits modularity, and one assumes there’s at least partial correspondence between the brain and mind. The critical questions for cognitive science and philosophy of mind concern the degree to which mental activities of interest are governed by one or many mechanisms (I sometimes think of this parameter as “task-level modularity”). For example, it is uncontroversial that the musculoskeletal system is highly modular, but nothing follows from this about the number of modules that are recruited to a particular physical task, or which sets of modules are recruited in common between distinct tasks. A physical task such as freestyle wrestling is certainly more “multi-modular” in our sense than, say, bicep curl exercises, and yet generic arguments for massive modularity do not address anything in this conceptual space (do the mental tasks we are interested in recruit just one module, or more?).

So, there is plenty of room for a non-modular account of mental activities over an interesting domain, even accepting the basic premise of “massive modularity”. A behaviourist/empiricist account would note that there clearly exists something like generic learning mechanisms in animals, including humans: fairly arbitrary behaviours can be learned through operant conditioning. Furthermore, there’s no strict theoretical impossibility of domain-general learning mechanisms. Machine learning techniques such as gradient descent or genetic algorithms do not require pre-programmed biases in order to learn all manner of functions¹. Domain-general learning abilities would seem likely to confer evolutionary advantages, so one might suppose, according to Carruthers-style arguments, that at least one of the mind’s modules could exist for that purpose. It would then remain an open question which of the mental capabilities of interest should be explained by the activity of such a module.

There are numerous arguments that speak to this question. I don’t intend to review them all here and now; I’ll probably come back to this topic. One of the weaker arguments, though, in my opinion, is the argument from the g factor, or “general intelligence”. The g factor is what emerges from a statistical factor analysis of the correlations between various tests of mental ability, and it is purported to explain a large fraction of the variance in performance across tests. This somewhat cuts against a kind of simplistic mental modularity account: one might imagine that the mind is made up of separate modules for mathematical reasoning, verbal reasoning, social reasoning, etc., and that performance in these domains would be mostly uncorrelated. The strong correlation among tests in these areas, which the g factor substantially captures, undermines this expectation. However, the emergence of a robust factor from factor analysis really tells us very little about the underlying causal structure.

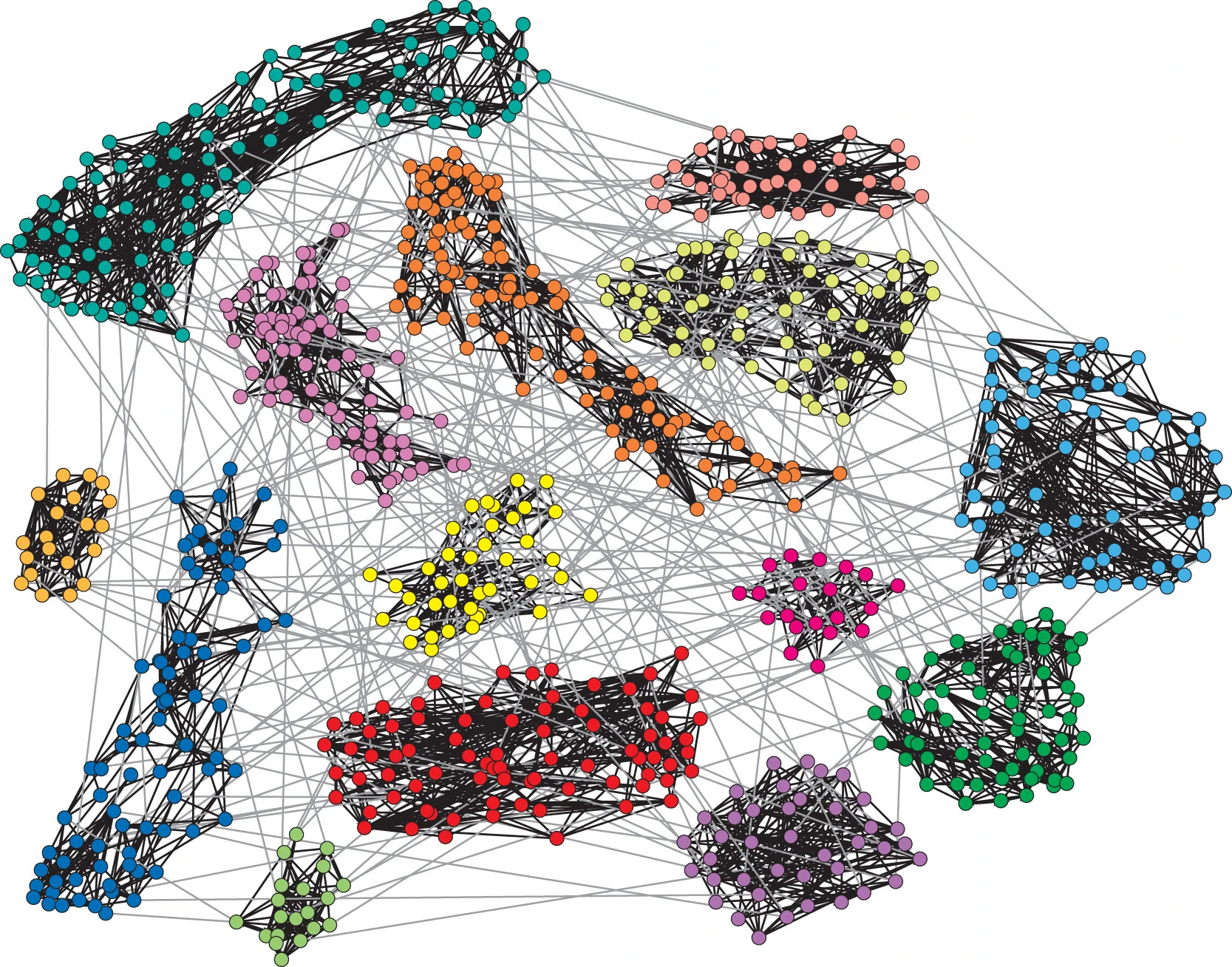

One thing advocates of this sort of view are apt to forget is that a factor is nothing other than a weighted sum. But a weighted sum is necessarily decomposable into sub-components! So what can we say about “modules”? The sub-components of a factor also need not be intercorrelated (let alone causally related) for the factor to capture a bunch of statistical variation. All that matters is that each of the components contributes to some degree to performance in most of the tests being considered together. In my opinion, this was demonstrated most elegantly by Cosma Shalizi on his blog some years ago. Using simulations, he shows that even 1000 completely uncorrelated variables can, when randomly combined, produce a robust common factor. It isn’t quite the knockdown argument against “monolithic g” I suspect Cosma believes it to be (for reasons I can explore down the line), but it falsifies certain common misconceptions.
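The flavour of that result is easy to reproduce. What follows is a minimal sketch in the spirit of Shalizi’s simulation, not a reproduction of his code: the function name, the sample sizes, and the choice to give every recruited ability an equal weight of 1 are all my own assumptions. Each simulated “test” score is just the sum of a random subset of mutually independent “abilities”, and the leading eigenvalue of the test correlation matrix stands in for a common factor (a principal-component shortcut rather than a full factor analysis).

```python
import numpy as np


def simulate_common_factor(n_people=2000, n_abilities=1000, n_tests=10,
                           abilities_per_test=500, seed=0):
    """Hypothetical illustration: independent abilities, overlapping tests.

    Returns the test correlation matrix, the share of total variance
    captured by the leading eigenvalue, and the mean off-diagonal
    correlation between tests.
    """
    rng = np.random.default_rng(seed)

    # Independent standard-normal abilities: uncorrelated by construction.
    abilities = rng.standard_normal((n_people, n_abilities))

    # Each test recruits a random subset of abilities, each with weight 1
    # (an assumption made for simplicity).
    loadings = np.zeros((n_abilities, n_tests))
    for t in range(n_tests):
        chosen = rng.choice(n_abilities, size=abilities_per_test, replace=False)
        loadings[chosen, t] = 1.0

    # A test score is a weighted sum of the abilities the test recruits.
    scores = abilities @ loadings

    # Correlations between tests (columns are the variables).
    corr = np.corrcoef(scores, rowvar=False)

    # eigvalsh returns eigenvalues in ascending order; the last is the largest.
    eigvals = np.linalg.eigvalsh(corr)
    leading_share = eigvals[-1] / eigvals.sum()

    # Mean correlation between distinct tests.
    off_diagonal = corr[~np.eye(n_tests, dtype=bool)]
    return corr, leading_share, off_diagonal.mean()


if __name__ == "__main__":
    corr, share, mean_r = simulate_common_factor()
    print(f"mean correlation between tests: {mean_r:.2f}")
    print(f"variance share of leading eigenvalue: {share:.2f}")
```

With these defaults any two tests share about half of their abilities, so their correlation should come out at around 0.5 even though no two abilities are correlated with each other. The apparent “general factor” here is produced entirely by overlap in what the tests recruit.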

To sharpen the point, it might help to consider something a little more familiar. My go-tos in this regard are usually parameters of physical ability, which most of us have some strong intuitions about. I’d venture, for example, that there likely exists some linear combination of fitness-relevant measurements (height, lean mass ratio, arm strength, \(\mathrm{VO}_{2\,\mathrm{max}}\), vertical leap, etc.) that would effectively predict performance across a range of real-world athletics/sports benchmarks (rowing, running, climbing, etc.), at least among untrained people. Clearly it would not follow from this that athleticism/sports physiology abilities can all be explained by one common mechanism! If it turned out that rowing, running, and climbing ability were reasonably well correlated among the general population, we need not conclude that there is a “general athleticism” module somewhere in the body. We could instead conclude that a variety of overlapping physiological modules contribute to greater or lesser degrees to performance in each of these activities. The same thought is available as we discuss the g factor.

There is plenty more to say about g in this connection, but I will stow it for now. I will inevitably return to the discussion around “intelligence” more broadly, and what can really be said about such a notion in the abstract. But back on the topic of modularity, obviously, I haven’t advanced a positive account of it for the “mental activities of interest” that I earlier claimed to be the real desideratum. I can’t pretend to have an account like that to hand. But I’d submit that we can safely dispense with at least one rather common argument against it.

Footnotes

1. This, I think, explains the popularity of behaviourism among ML-adjacent types. Much to say here, but one brief point about data curation: ML algorithms are typically fed large amounts of relatively structured data, which strongly encourages the learning of the desired data generating process. Data curation in this manner is a form of inductive biasing, albeit one carried out by ML professionals, upstream of the learning algorithm itself. Chomsky’s “poverty of the input argument”, if successful, shows that in the case of language the unstructured linguistic data a human child is exposed to wildly underdetermines the space of possible valid grammars, which suggests the existence of an innate inductive bias. Modern large language models (LLMs) have been suggested to undermine this sort of argument, but such a claim isn’t helped by the fact that modern LLMs are trained on many orders of magnitude more (and more structured) linguistic data than human learners. Furthermore, all the promising approaches to overcoming this issue involve attempts to create the right kind of inductive biases in LLM training regimes.